What is Adversarial Machine Learning and its Key Concepts?

Adversarial Machine Learning (AML) is the study of how machine learning (ML) models can be attacked and how those attacks can be mitigated. Traditional machine learning assumes that the data used for training and the data used for testing come from the same distribution. Adversarial Machine Learning explores scenarios where that assumption fails because an adversary actively manipulates the input data to deceive the model's predictions.

Key concepts in Adversarial Machine Learning include:

Adversarial Attacks:

Adversarial attacks involve deliberately manipulating input data in a way that may be imperceptible to humans but can lead to misclassification or incorrect predictions by the machine learning model. These attacks aim to exploit vulnerabilities in the model's decision-making process.

Adversarial Examples:

Adversarial examples are specifically crafted inputs designed to mislead a machine learning model. These examples are generated by applying perturbations to legitimate input data, causing the model to make incorrect predictions.
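As a rough illustration of how such a perturbation can be computed, the sketch below applies a sign-of-gradient step (the idea behind the Fast Gradient Sign Method, which this article does not name explicitly) to a tiny logistic regression model. The weights, the input, and the function names `predict_proba` and `fgsm` are assumptions made up for this example; it is a minimal sketch, not a reference implementation.

```python
import math

def predict_proba(w, b, x):
    """Probability of class 1 under a logistic regression model."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))

def sign(v):
    """Return -1, 0, or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)

def fgsm(w, b, x, y, eps):
    """Move each feature by eps in the direction that increases the loss."""
    p = predict_proba(w, b, x)
    # For binary cross-entropy, d(loss)/dx_i = (p - y) * w_i.
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]

# Hypothetical model and input, chosen only for illustration.
w, b = [2.0, -3.0, 1.0], 0.0
x, y = [0.3, 0.1, 0.2], 1

x_adv = fgsm(w, b, x, y, eps=0.2)
print(predict_proba(w, b, x) > 0.5)      # is the original input classified as 1?
print(predict_proba(w, b, x_adv) > 0.5)  # is the perturbed input classified as 1?
```

With these made-up numbers, no feature changes by more than 0.2, yet the model's logit moves from 0.5 to about -0.7, so the predicted class flips from 1 to 0.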

Adversarial Training:

Adversarial training involves augmenting the training dataset with examples that are intentionally modified to be adversarial. By exposing the model to adversarial examples during training, it typically becomes more robust and better able to resist the kinds of adversarial attacks it was trained against.
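The following sketch shows one way the augmentation step could look for a small logistic regression model: at every epoch, adversarial copies of the training points are crafted against the current parameters and added to the batch. The toy dataset, the hyperparameters (`eps`, `lr`, `epochs`), and all function names are assumptions for illustration only.

```python
import math

def predict_proba(w, b, x):
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(w, b, x, y, eps):
    """Sign-of-gradient perturbation against the current model."""
    p = predict_proba(w, b, x)
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]

def adversarial_train(X, Y, eps=0.3, lr=0.5, epochs=50):
    w, b = [0.0] * len(X[0]), 0.0
    for _ in range(epochs):
        # Clean examples plus adversarial copies crafted against the
        # current parameters.
        batch = [(x, y) for x, y in zip(X, Y)]
        batch += [(fgsm(w, b, x, y, eps), y) for x, y in zip(X, Y)]
        grad_w, grad_b = [0.0] * len(w), 0.0
        for x, y in batch:
            err = predict_proba(w, b, x) - y  # gradient of the loss w.r.t. the logit
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        n = len(batch)
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

def accuracy(w, b, X, Y):
    hits = sum((predict_proba(w, b, x) > 0.5) == bool(y) for x, y in zip(X, Y))
    return hits / len(X)

# Hypothetical, linearly separable toy data.
X = [[-1.0, -0.8], [-0.9, -1.1], [-1.2, -1.0],
     [1.0, 0.9], [0.8, 1.1], [1.1, 1.0]]
Y = [0, 0, 0, 1, 1, 1]

w, b = adversarial_train(X, Y)
X_adv = [fgsm(w, b, x, y, 0.3) for x, y in zip(X, Y)]
print(accuracy(w, b, X, Y), accuracy(w, b, X_adv, Y))
```

Crafting the adversarial copies inside the loop, rather than once up front, keeps them matched to the model as it changes. On real data this usually costs extra training time and may reduce accuracy on clean inputs.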

Transferability:

Transferability refers to the phenomenon where an adversarial example created to deceive one machine learning model can also deceive other models, even ones that use a different architecture or were trained on a different dataset. Adversarial examples often, though not always, transfer across models.
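A toy sketch of the idea: an adversarial input is crafted using only a surrogate model and is then checked against a separate target model with different parameters. Both linear models, their weights, and the helper names are hypothetical. Real models are far more complex, and transfer is not guaranteed.

```python
def score(w, b, x):
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def label(w, b, x):
    return 1 if score(w, b, x) > 0 else 0

def sign(v):
    return (v > 0) - (v < 0)

def craft_against(w, b, x, eps):
    """Step each feature by eps toward the other side of this model's boundary."""
    direction = -1 if label(w, b, x) == 1 else 1
    return [xi + direction * eps * sign(wi) for xi, wi in zip(x, w)]

# Hypothetical models: the attacker only has access to the surrogate.
surrogate = ([2.0, -3.0, 1.0], 0.0)
target = ([1.5, -2.0, 0.5], 0.1)

x = [0.3, 0.1, 0.2]
x_adv = craft_against(*surrogate, x, eps=0.2)

fooled_surrogate = label(*surrogate, x) != label(*surrogate, x_adv)
transferred = label(*target, x) != label(*target, x_adv)
print(fooled_surrogate, transferred)
```

In this example the two models have similar decision boundaries, which is why the perturbation carries over. The less alike the models are, the less reliable the transfer tends to be.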

Defense Mechanisms:

Various defense mechanisms are employed to enhance the robustness of machine learning models against adversarial attacks. These may include adversarial training, input preprocessing, and the development of models that are inherently more resistant to adversarial manipulation.
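As one concrete example of input preprocessing, the sketch below reduces the precision of each input feature so that small perturbations are rounded away before the model sees them. This technique is commonly called feature squeezing; it is our choice of illustration, not one named above. The function name `squeeze`, the number of levels, and the sample values are assumptions.

```python
def squeeze(x, levels=5):
    """Quantize features in [0, 1] to a fixed number of evenly spaced levels."""
    steps = levels - 1
    return [round(min(max(v, 0.0), 1.0) * steps) / steps for v in x]

# Hypothetical input and a small adversarial perturbation of it.
x = [0.5, 0.25, 0.75]
x_adv = [0.53, 0.22, 0.78]

print(squeeze(x))
print(squeeze(x_adv))
```

Here both inputs collapse to the same quantized values, so the model downstream would treat them identically. Preprocessing of this kind only handles perturbations smaller than the quantization step, and an attacker who knows about it can adapt, so it is usually combined with other defenses.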

White-Box and Black-Box Attacks:

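In white-box attacks, adversaries have complete knowledge of the target model's architecture and parameters, so they can compute exactly how a change to the input affects the output. In black-box attacks, adversaries lack this information and can only observe the model's outputs, so they craft adversarial examples by repeatedly querying the model or by transferring examples built against a substitute model.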
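To make the black-box setting concrete, the sketch below hides a model behind a `query` function that returns only a label, and the attacker searches for a label-flipping perturbation using nothing but those answers. The hidden weights, the exhaustive search over corner perturbations, and all names are assumptions for illustration. Practical black-box attacks use far more query-efficient strategies, since this search grows exponentially with the number of features.

```python
from itertools import product

def make_black_box():
    """Return a query function; the parameters stay hidden from the caller."""
    w, b = [2.0, -3.0, 1.0], 0.0  # hypothetical hidden model
    def query(x):
        return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0
    return query

def black_box_attack(query, x, eps):
    """Try every +/-eps combination and return the first one that flips the label."""
    original = query(x)
    queries = 1
    for signs in product((-1, 1), repeat=len(x)):
        candidate = [xi + s * eps for xi, s in zip(x, signs)]
        queries += 1
        if query(candidate) != original:
            return candidate, queries
    return None, queries  # no flip found within the eps budget

query = make_black_box()
x = [0.3, 0.1, 0.2]
x_adv, n_queries = black_box_attack(query, x, eps=0.2)
print(x_adv is not None, n_queries)
```

A white-box attacker facing the same model could read the weights directly and find a flipping perturbation in a single step, which is the practical difference between the two settings.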

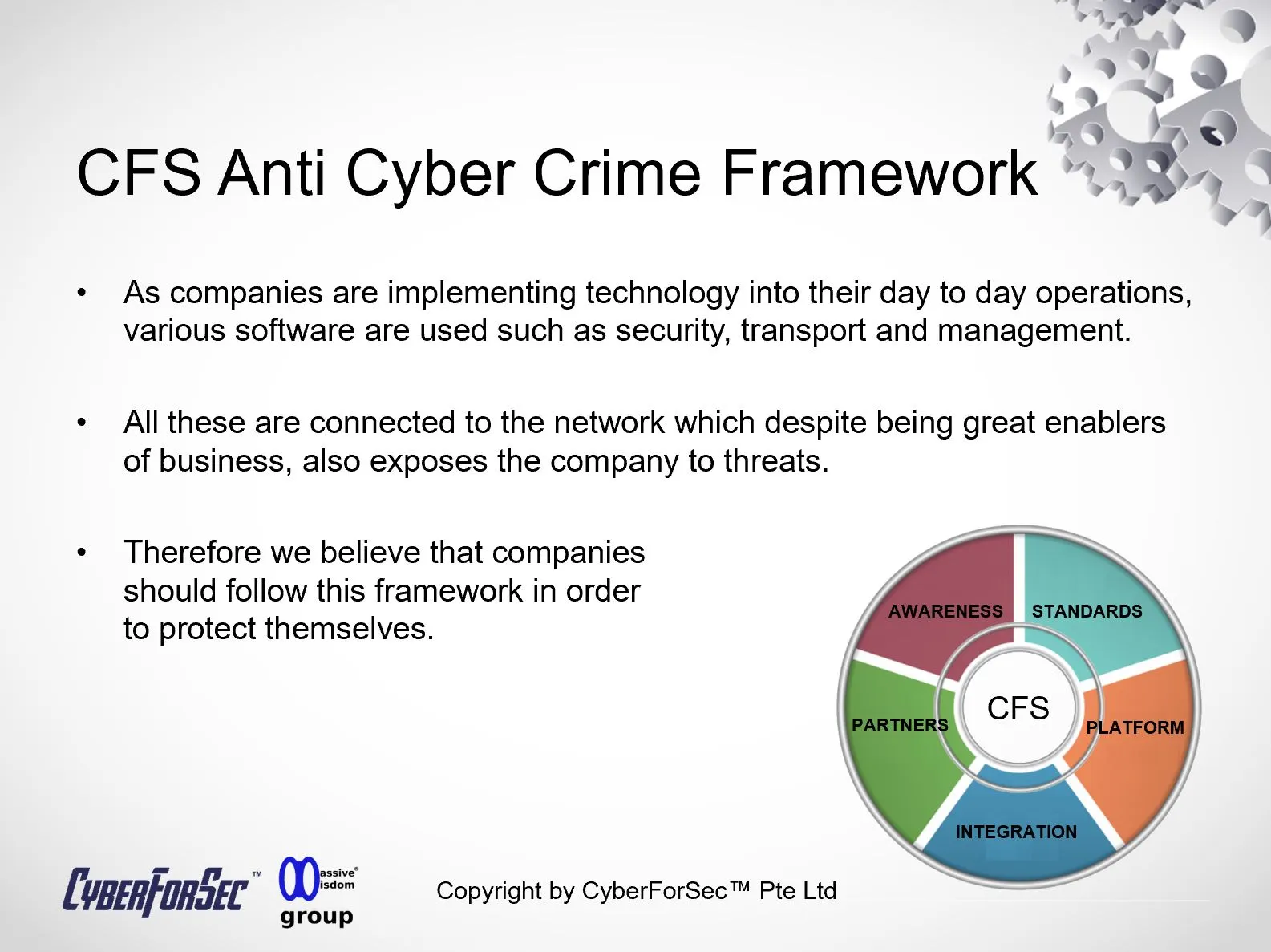

Adversarial Machine Learning has implications across various domains, including computer vision, natural language processing, and cybersecurity. Researchers and practitioners in AML work towards developing models that are more robust and resistant to adversarial manipulation, ensuring the reliability and security of machine learning systems in real-world applications.

Regards,

By Alvin Lam Wee Wah

and Team at CyberForSec.

Interested in what you've read and want to know more or collaborate with us? Contact us at customersuccess[a]cyberforsec.com replacing the [a] with @.